Goodbye Webmaster Tools, Hello Google Search Console

If you manage your website you may have noticed that Google Webmaster Tools has been renamed Search Console. This is the latest in a series of changes that Google has made to the Webmaster Tools (WMT) admin panel.

For some time now the emphasis of Webmaster Tools has been to provide information to website owners to help rank sites better in Google. When Google first launched Webmaster Tools it was mostly used by webmasters as a way to check that Google was able to index their pages, which is why the sitemap section was so important. However, in recent years it has increasingly been used by SEOs and digital marketing experts, who often have very little technical knowledge, to optimise websites. The name change reflects this shift and also highlights Google’s main objectives for the platform.

Google’s John Mueller explained the reason for the change on Google+: “I remember … back when Google Webmaster Tools first launched as a way of submitting sitemap files. It’s had an awesome run, the teams have brought it a long way over the years. It turns out that the traditional idea of the “webmaster” reflects only some of you. We have all kinds of Webmaster Tools fans: hobbyists, small business owners, SEO experts, marketers, programmers, designers, app developers, and, of course, webmasters as well. So, to make sure that our product includes everyone who cares about Search, we’ve decided to rebrand Google Webmaster Tools as Google Search Console.”

John Mueller joined Google following the success of his own GSite Crawler, which was the first successful automated sitemap generator. This was a vital tool in the days before content management systems: when websites consisted of a series of static HTML files, the only way to create a list of URLs was to use a tool that analysed the site and reported every URL it found.

What is Search Console?

The latest version of the Google Search Console reports website health and performance in search. It is divided into four key areas: Search Appearance, Search Traffic, Google Index and Crawl. The console also advises website managers of any security issues, such as hacking or phishing on a website.

Search Appearance

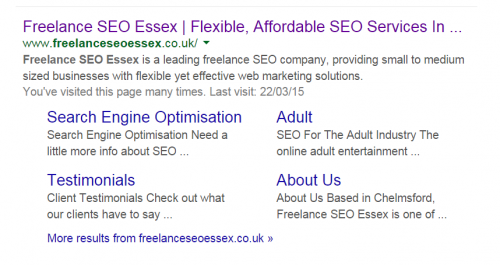

Search Appearance provides information on structured data and sitelinks, and offers HTML improvement suggestions. For example, Sitelinks allows site owners to view and modify the deep links that Google sometimes shows in search when a person searches for a website by name or brand. If we wanted to remove one of our own sitelinks, for instance, we could do this in Search Console.

HTML Improvements reports when important elements are missing or poorly optimised for a page or site. Typical issues reported are missing or duplicate title tags, or title tags that are either too short or too long. The title tag is still considered one of the most important elements in terms of SEO and if a site uses the same title tag multiple times it will dilute the SEO value of each of those pages. This is why it is so important to manage and optimise website content.
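The kind of title tag check described above can be approximated offline before Google reports it. The following is a minimal sketch, not Google's method: the function name `audit_titles`, the sample pages and the length thresholds are all assumptions made for illustration, since Google does not publish exact limits.

```python
from collections import defaultdict

# Assumed thresholds, chosen for illustration only; Google publishes no exact limits.
MIN_TITLE_LENGTH = 10
MAX_TITLE_LENGTH = 60


def audit_titles(pages):
    """Return a dict mapping each issue type to the list of affected URLs.

    `pages` maps a URL to its <title> text, or to None if the tag is missing.
    """
    issues = defaultdict(list)
    urls_by_title = defaultdict(list)

    for url, title in pages.items():
        text = (title or "").strip()
        if not text:
            issues["missing"].append(url)
            continue
        if len(text) < MIN_TITLE_LENGTH:
            issues["too_short"].append(url)
        elif len(text) > MAX_TITLE_LENGTH:
            issues["too_long"].append(url)
        # Group case-insensitively so "Home" and "home" count as the same title.
        urls_by_title[text.lower()].append(url)

    for urls in urls_by_title.values():
        if len(urls) > 1:
            issues["duplicate"].extend(urls)

    return dict(issues)


# Hypothetical site used only to demonstrate the report.
pages = {
    "/": "Acme Widgets | Handmade Widgets in Leeds",
    "/about": "Acme Widgets | Handmade Widgets in Leeds",
    "/contact": "Contact",
    "/blog": None,
}
report = audit_titles(pages)
for issue, urls in report.items():
    print(issue, urls)
```

In this made-up example the home and about pages share a title, the contact page title is very short and the blog page has no title at all, which are the same categories of problem that HTML Improvements flags.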

Search Traffic

Search Traffic provides information about how people are finding your website. It reports the number of impressions in search, the number of clicks, the click-through rate (CTR) and the average position. Drilling down, you can also view links to your site and internal links, and set international targeting.
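CTR is the share of impressions that resulted in a click. As a small worked sketch, the calculation looks like this; the function name and the figures are invented for illustration and are not taken from any real report.

```python
def click_through_rate(clicks, impressions):
    """Return CTR as a percentage, or 0.0 when there were no impressions."""
    if impressions == 0:
        return 0.0
    return 100.0 * clicks / impressions


# Hypothetical query data: (query, impressions, clicks).
rows = [
    ("handmade widgets", 1200, 48),
    ("widgets leeds", 300, 30),
    ("acme widgets opening hours", 0, 0),
]
for query, impressions, clicks in rows:
    print(f"{query}: {click_through_rate(clicks, impressions):.1f}% CTR")
```

A query with many impressions but a low CTR is often a sign that the title or description shown in search could be improved.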

Two relatively new areas are the Manual Actions section, which reports any instance of Google’s quality control team applying a manual penalty to a website, and the Mobile Usability section, which details problems that affect how a website is displayed on mobile devices. In April 2015, Google started using mobile-friendliness as a ranking signal in mobile search results, so it is more important than ever to have a good mobile website.

Google Index

Google Index reports overall index status, that is, how many pages are indexed in Google, as well as the keywords that Google considers most significant in your website's content.

Crawl

The Crawl section reports on technical errors that affect the way Google “crawls” a website. Google analyses websites by sending its “Googlebot” to crawl, or spider, each page and link on a website. This is similar to the type of crawler that John Mueller created to build sitemap pages with GSite Crawler.

One of the most useful tools in this section is Fetch as Google. This tool allows you to view your website code as Google sees it, or, as is sometimes the case, it will show when Google cannot access a page.

One of the most common SEO errors that we see is when a page or section of a website is set to “noindex”. Googlebot can still crawl these pages, but the directive tells Google not to list them in the search index. Fetch as Google will also allow you to identify how elements in a page, such as those delivered by JavaScript or Flash, are viewed by Google.
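A noindex directive can be set either in a robots meta tag in the page's HTML or in an `X-Robots-Tag` HTTP response header. The sketch below shows one way to check a page for either; the class and function names are our own, and it deliberately ignores user-agent prefixes in the header (such as `googlebot: noindex`), so treat it as a simplification rather than a full parser.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindexed(html, x_robots_tag=""):
    """Return True if the HTML or the X-Robots-Tag header value asks not to be indexed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_directives = {d.strip().lower() for d in x_robots_tag.split(",")}
    all_directives = parser.directives | header_directives
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in all_directives or "none" in all_directives


blocked_page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
normal_page = '<html><head><meta name="robots" content="index, follow"></head></html>'
print(is_noindexed(blocked_page))
print(is_noindexed(normal_page))
print(is_noindexed("<p>No meta tag</p>", x_robots_tag="noindex"))
```

Running a check like this across a site's key templates is a quick way to catch a noindex tag left over from a staging environment.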

The Crawl section still includes the Sitemaps tool that allows you to specify an XML sitemap. The Sitemaps tool now reports on the number of pages submitted in a sitemap and the number of pages indexed by Google. If a site has many pages in the sitemap file that are not indexed, this can indicate a problem that requires the attention of an SEO expert.
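For reference, an XML sitemap is a list of `<url>` entries, each with a `<loc>` element, in the sitemaps.org namespace. The sketch below counts the URLs in a small sitemap and compares that with an indexed figure; the example.com URLs, the helper names and the indexed count (which you would read off the Sitemaps report by hand) are all hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# A made-up three-page sitemap used only for this example.
SAMPLE_SITEMAP = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/about</loc></url>
  <url><loc>https://www.example.com/contact</loc></url>
</urlset>
""".strip()


def sitemap_urls(xml_text):
    """Return the list of page URLs found in a sitemap's <loc> elements."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def indexed_share(submitted_count, indexed_count):
    """Return the fraction of submitted pages that are indexed (0.0 if none submitted)."""
    if submitted_count == 0:
        return 0.0
    return indexed_count / submitted_count


urls = sitemap_urls(SAMPLE_SITEMAP)
indexed_count = 2  # hypothetical figure copied from the Sitemaps report
share = indexed_share(len(urls), indexed_count)
print(f"{len(urls)} submitted, {indexed_count} indexed ({share:.0%})")
```

What counts as a worrying gap depends on the site, so the share is best tracked over time rather than judged against a fixed threshold.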

If you have not already set up Google Search Console, get in touch and we will run you through the process and suggest how you can improve the search engine performance of your website.